You Should Not Lower Your Thermostat (To Save Energy)

2024-01-03 15:37 - Opinion

Not only is it common for thermostats to offer features to set different target temperatures at different times of day, it's commonly advised that if you (during heating season) "lower your thermostat one degree, you'll save" about 1 percent for each degree of thermostat adjustment per 8 hours; or [net] 3% on your heating bill; or between 1 and 3 percent of your heating bill; or that by regularly turning down the temperature by 10 to 15 degrees before leaving the house for an eight-hour span, you can save between 5% and 15% a year on your heating bill. Most of the sources I could (easily) find cite the same Department of Energy numbers, though, and the Department itself repeats "You can easily save energy in the winter by ... setting it lower while you're asleep or away from home" and "During winter, the lower the interior temperature, the slower the heat loss".

That last point comes closest to convincing me. Yes, it is definitely true that a lower temperature delta directly relates to a slower transfer of energy. But if it's 30° outside and 70° inside, how much can it really matter whether that delta is 40 or 38 degrees? I've doubted this since I was young, living in my parents' house and not in control of the thermostat. Then I moved into apartments where I didn't control the heat. But now I'm living in a detached home and I do control the thermostat.

Because the thermostat is programmable, it's easy to set it cooler overnight, for less heating. So I've done that. But does this really save any money? I can only dive deeper into the data I have, for the ~70-year-old home I'm in. But that's at least something.

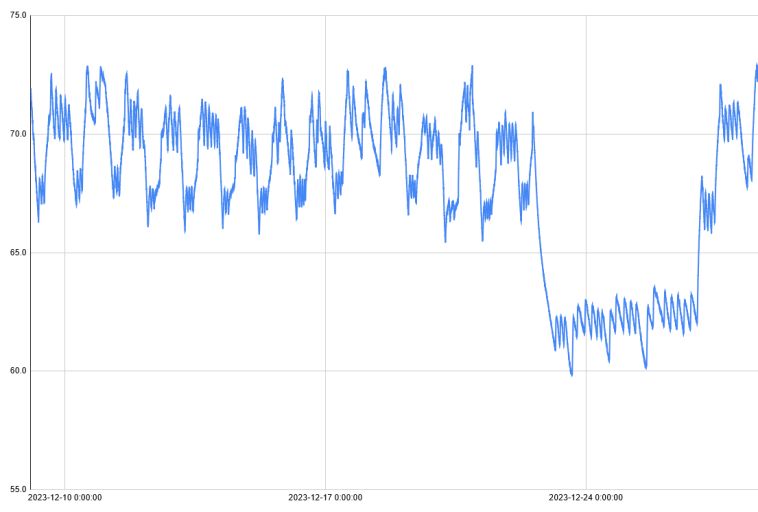

Here's the full data set I'm operating from, covering most of December 2023. Critically, this includes nearly a week around Christmas, when I was traveling and set the thermostat extra low. Normally it's set to 71°; I let that drop to 67° "overnight" and all the way to 60° while I was away for a few days. (I think? The graph looks higher. It's set to 60° now...) This is generally visible in the graph above. Though all days vary widely as the weather does, the difference is clear, especially between the 21st and 22nd.

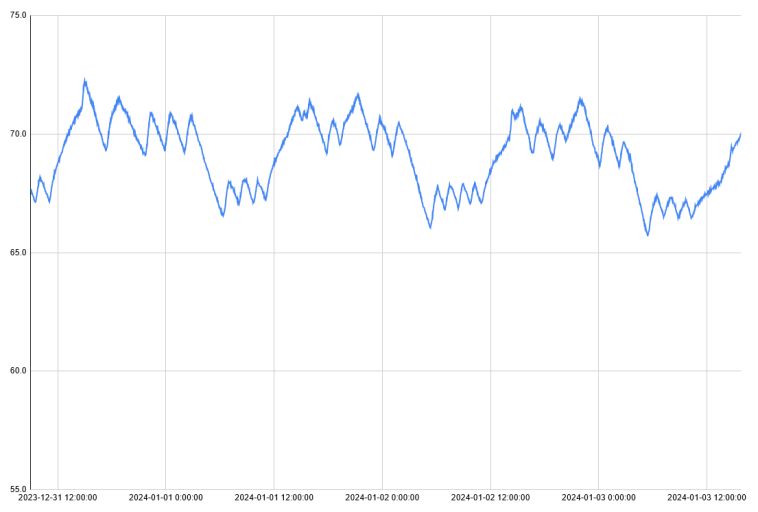

Here's a closer view of just the past couple of days, and thus a better view of the topic really in question. Do I save money overall by letting the heater relax overnight? My argument (which I'll soon try to back up with data) is no: the jagged peaks and troughs while the thermostat maintains that slightly lower temperature overnight look very close to those during the day, while it maintains a higher temperature. Yes, there's a long period each evening when no heating is going on, but there's a seemingly equal period in the morning as the heater works overtime to raise the temperature back up.

I let the thermostat relax starting at 10:00 PM. (This is hard to see in the graphs because their time zones are incorrect, whoops.) A normal heat/cool cycle can be roughly two hours, and it might have just completed at 10:00 PM, or just be about to start, or anywhere in between. The temperature has usually fallen below the night setting (so the furnace starts up) by 12:30 to 1:00 AM, where it stays until 6:00 AM, when the thermostat resumes its normal daytime setting (this one is always on time, because the temperature is always well below that newly active setting). It then takes until 9:30 AM, sometimes a bit more, to satisfy the thermostat that normal daytime temperature has been reached. That's roughly three hours to fall to the lower overnight setting and about three and a half to rise back from it: very nearly the same.

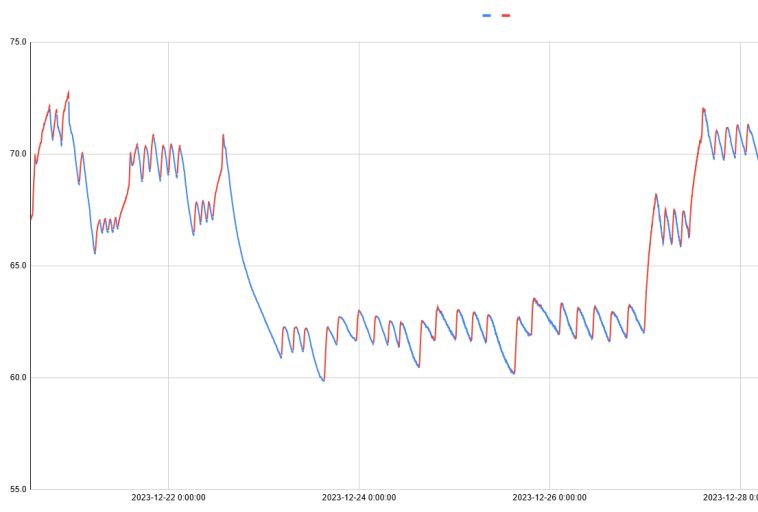

This might be the most interesting graph. I've managed to color-code it red while heating and blue while not. That's visually interesting but hard to use directly. However, I've used the "rising" and "falling" signals that control the color of this chart as an input for further consideration.

Outside temperature is a confounding factor for such analysis. I've tried to analyze a wide enough time range to average out its effects. Also, I've applied moving averages to try to eliminate measurement noise from the result. That said, first result: there were 13,484 minutes where the temperature was rising and 20,354 where it was falling. Or: the heater was active for 39.85% of the time, perhaps call it 40%. But we'll save those significant figures because the next step is to wonder: how does that proportion vary depending on the set point of the thermostat?
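To make that first result concrete, here is a minimal sketch of the kind of calculation described above. It is not the code I actually ran: the function names, the trailing-average smoothing, and the five-sample window are all assumptions made for illustration. Only the two minute counts at the bottom come from the data.

```python
from collections import deque

def moving_average(values, window):
    """Trailing moving average; early outputs average fewer samples."""
    out, buf, total = [], deque(), 0.0
    for v in values:
        buf.append(v)
        total += v
        if len(buf) > window:
            total -= buf.popleft()
        out.append(total / len(buf))
    return out

def count_rising_falling(temps, window=5):
    """Count steps where the smoothed temperature rose vs. fell (or held).

    `temps` is assumed to hold one indoor reading per minute.
    The window size is an illustrative guess, not a tuned value.
    """
    smooth = moving_average(temps, window)
    rising = sum(1 for a, b in zip(smooth, smooth[1:]) if b > a)
    falling = len(smooth) - 1 - rising
    return rising, falling

def percent_on(rising, falling):
    """Share of minutes spent rising, used as a proxy for furnace-on time."""
    return 100.0 * rising / (rising + falling)

# The minute counts reported above.
print(f"{percent_on(13_484, 20_354):.2f}%")  # 39.85%
```

Treating "temperature rising" as "furnace on" is itself an approximation, since the temperature keeps climbing briefly after the burner stops and lags when it starts.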

I wrote some code to group these rising and falling temperature periods together, and distinguish them by the current temperature when switching from one to another. (I.e. if we were rising and are now falling, and the temperature is over 70: that's a normal daytime event. And so on.) And here are the results (time is in minutes):

| Setting | Time On (min) | Time Off (min) | Percent On |
|---|---|---|---|
| Normal | 7,996 | 10,425 | 43.41% |
| Night | 3,931 | 5,201 | 43.05% |
| Holiday | 1,556 | 4,728 | 24.76% |

For the data above I had my little program simply look at whether the temperature was rising or falling, and whether it was closest to the normal, night, or holiday range. Then for each minute, record which bucket we fell into. (I tried other more clever-seeming things, but they made the three hour coast or burn always get recorded completely into the "wrong" bucket, throwing off the numbers. There's really no good way to record those long segments that are partially normal and partially night time.)
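The per-minute bucketing just described can be sketched roughly as follows. This is an illustration rather than my little program itself: the names `SETPOINTS`, `nearest_setting`, and `tally` are hypothetical, and "closest to the range" is simplified here to "closest to the set point".

```python
# Thermostat set points in °F, taken from the settings described earlier.
SETPOINTS = {"Normal": 71, "Night": 67, "Holiday": 60}

def nearest_setting(temp):
    """Name of the set point closest to `temp` (ties go to the first listed)."""
    return min(SETPOINTS, key=lambda name: abs(SETPOINTS[name] - temp))

def tally(samples):
    """Count on/off minutes per setting.

    `samples` is assumed to be an iterable of (temperature, is_rising)
    pairs, one per minute; rising is treated as "furnace on".
    """
    table = {name: {"on": 0, "off": 0} for name in SETPOINTS}
    for temp, is_rising in samples:
        table[nearest_setting(temp)]["on" if is_rising else "off"] += 1
    return table

def percent_on(row):
    """Percentage of a bucket's minutes spent with the furnace on."""
    total = row["on"] + row["off"]
    return 100.0 * row["on"] / total if total else 0.0

# Check against the "Normal" row of the table.
print(f"{percent_on({'on': 7996, 'off': 10425}):.2f}%")  # 43.41%
```

Bucketing each minute independently is what keeps a three-hour coast or burn from landing entirely in one bucket: the minutes near 71° count as normal and the minutes near 67° count as night.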

First: clearly, setting the thermostat down by 11° has a real effect. The furnace runs 75% more (!) of the time, and thus uses about that much more energy, to heat this home to 71° as compared to 60°. (In December average temperatures were mostly in the 40s, and those days I was away were right in the typical range.) That's much more of an effect than I expected. I left early on the 22nd and returned by early evening of the 26th. Average outdoor temperatures were roughly 45° for that time. So the furnace had to add 15° to get to 60° vs. adding 26° to get to 71°: a difference of about 73%, almost exactly the 75% observed! It's definitely worth turning the furnace down when leaving for several days straight.
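The arithmetic behind that comparison, spelled out as a small sketch. The helper name `percent_more` is just for illustration, and it assumes (as the paragraph above does) that heating effort scales with the indoor/outdoor temperature difference.

```python
def percent_more(a, b):
    """How much larger `a` is than `b`, as a percentage of `b`."""
    return 100.0 * (a / b - 1.0)

# Observed: share of time the furnace ran at the normal vs. holiday setting.
observed = percent_more(43.41, 24.76)

# Expected: degrees to add above a ~45° outdoor average for each setting.
expected = percent_more(71 - 45, 60 - 45)

print(f"observed: {observed:.1f}% more furnace time")   # 75.3%
print(f"expected: {expected:.1f}% more degrees to add")  # 73.3%
```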

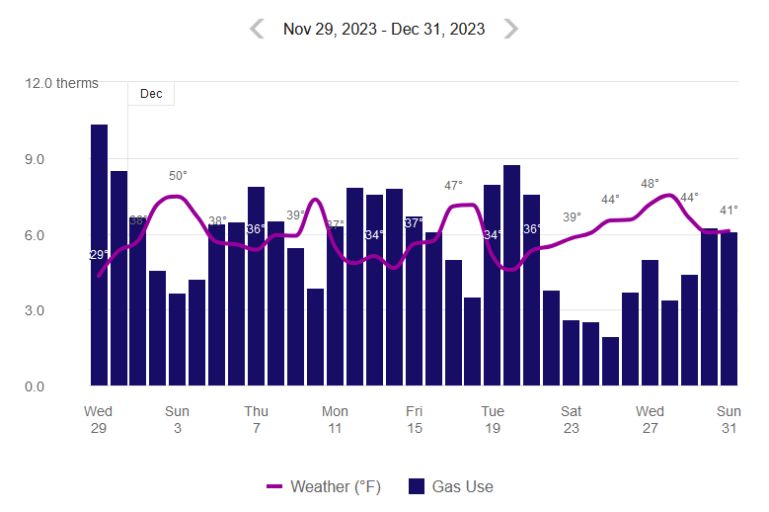

We can confirm this with usage data from the utility:

This says the weather on the 15th/16th was very close to that on the 23rd/24th, but gas usage was 12.8 vs. 5.1 on those two pairs of days, respectively. That's an even bigger difference, though of course I wasn't using hot water or anything else while I wasn't here.

But "normal" vs. "night" results are so close as to be indistinguishable from measurement noise. For this house with this furnace, the idea of night time energy savings looks just as wrong as I originally thought. I'll wager that with better insulation the inside temperature would fall slower, and perhaps the furnace could stay off all night or nearly so. The temperature might even rise faster (i.e. more efficiently) in the morning. But here, it doesn't seem to happen.

How about in theory? Starting from the same 45° we examined before: Raising the indoors up to 71° is a difference of 26°, and my relaxed setting is a difference of 22°. A theoretical difference of 18%, but not borne out by actual observed measurements. But that "average 45°" comparison only made sense when I was inspecting several whole days at the same setting. In reality, the outdoor temperature falls by much more than four degrees overnight, so I'd expect to use the heat more, then. Maybe I'm really saving roughly 20%, but also spending just as much more due to the lower night time outdoor temperature? I'd have to not drop the thermostat overnight for a while and re-run this whole analysis to try to figure that out.
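As a rough sketch of how sensitive that theoretical figure is to the outdoor temperature, the same ratio can be computed for a few colder nights. The function name is hypothetical, the outdoor temperatures other than 45° are illustrative inputs rather than measurements, and the whole thing assumes heat demand is simply proportional to the indoor/outdoor difference.

```python
def extra_demand_percent(outdoor, day_setpoint=71, night_setpoint=67):
    """Extra heating needed to hold the day setting vs. the night setting.

    Assumes demand is proportional to (setpoint - outdoor), which is a
    simplification of how a real house loses heat.
    """
    day_delta = day_setpoint - outdoor
    night_delta = night_setpoint - outdoor
    if night_delta <= 0:
        raise ValueError("outdoor temperature must be below the night setpoint")
    return 100.0 * (day_delta / night_delta - 1.0)

# 45° is the average used above; the colder values are illustrative only.
for outdoor in (45, 40, 35, 30):
    print(f"{outdoor}° outside: {extra_demand_percent(outdoor):.1f}% more")
# 45° outside: 18.2% more
# 40° outside: 14.8% more
# 35° outside: 12.5% more
# 30° outside: 10.8% more
```

Under this simple model, the colder it gets outside, the less a fixed four-degree setback matters in relative terms.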